Quality benchmarks

Quick Navigation

- What are Meeting Quality Benchmarks?

- When this is useful

- How it works (high level)

- How to create Quality Benchmarks

- Writing effective benchmarks

- Where you see results in a summary

- Concrete example benchmarks

- Troubleshooting & tips

- Next steps

1. What are Meeting Quality Benchmarks?

Meeting Quality Benchmarks let you define the conversation elements that must occur in a meeting (e.g., confirming next steps, handling a pricing objection, or asking for a satisfaction score).

Sally then analyzes each matching meeting and shows whether those elements happened, with time‑stamped references so you can jump straight to the relevant part of the transcript.

The goal is consistent, professional conversations across your team—without manual checklists or tedious reviews.

2. When this is useful

- Sales & presales: Ensure that discovery frameworks (e.g., budget/timeline/need) are covered and that common objections are handled.

- Customer Success: Verify that next steps and owners were agreed, or that a health check and renewal risks were discussed.

- Hiring & HR: Check that interviewers explained the role, asked core questions, and outlined next steps.

- Project & delivery: Confirm that scope, success criteria, risks, and responsibilities were clarified in kickoffs and reviews.

- Compliance & privacy: Make sure required notices were read aloud and consent was recorded.

3. How it works (high level)

- You create one or more benchmarks (also called objectives)—each is a clear requirement phrased as a question or checklist item.

- You decide where they apply (all meetings or selected meeting types, e.g., Onboarding, HR Meetings or Dailies).

- Sally analyzes transcripts of matching meetings and surfaces:

  - a status for each benchmark (e.g., Found / Not Found),

  - one or more timestamps showing where the topic was addressed,

  - short evidence snippets to quickly review context.

Detection quality depends on transcription quality, which is affected by microphones, crosstalk, and the spoken language. Keep benchmarks specific and unambiguous.

4. How to create Quality Benchmarks

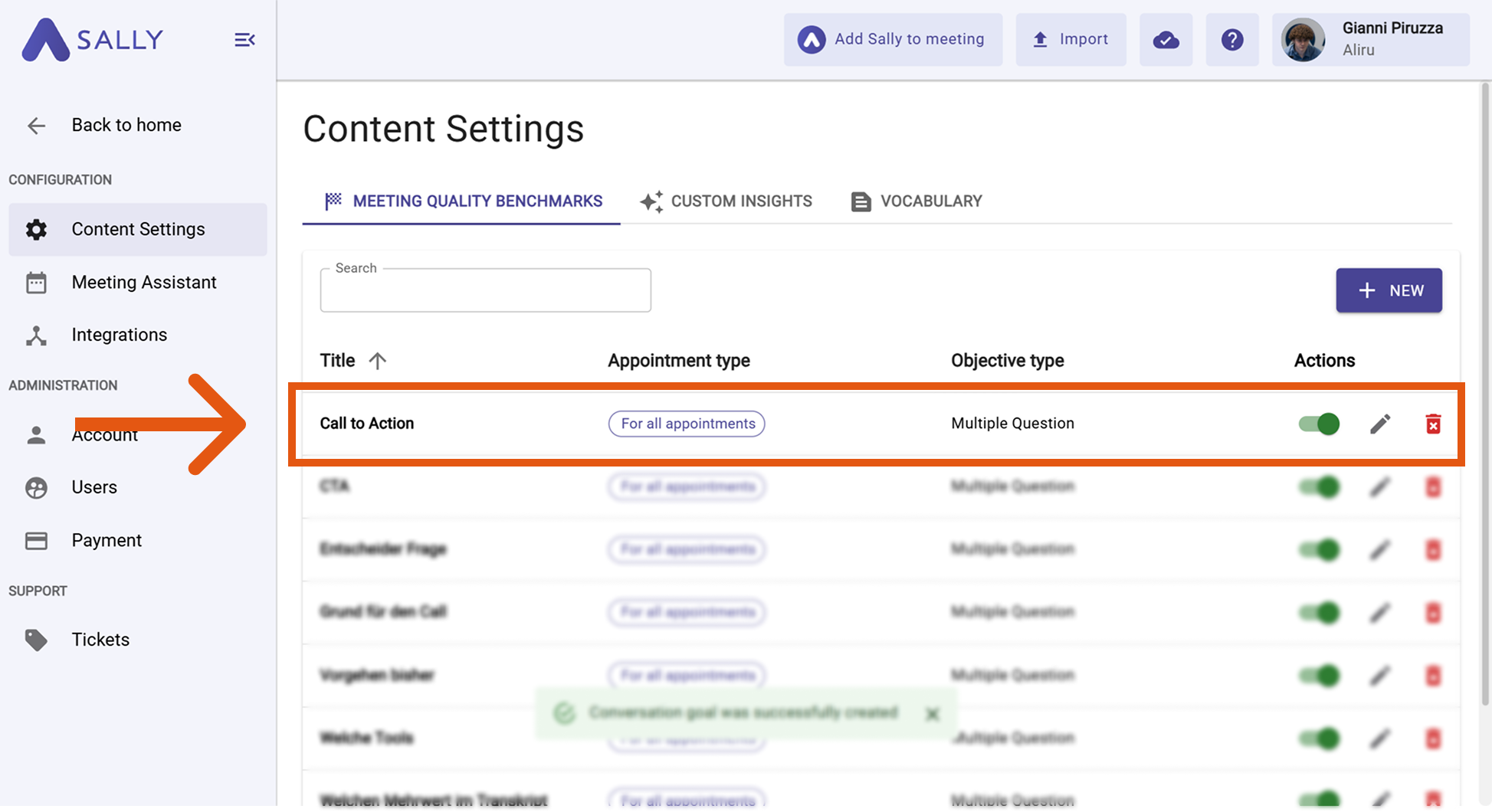

You configure benchmarks in "Content Settings":

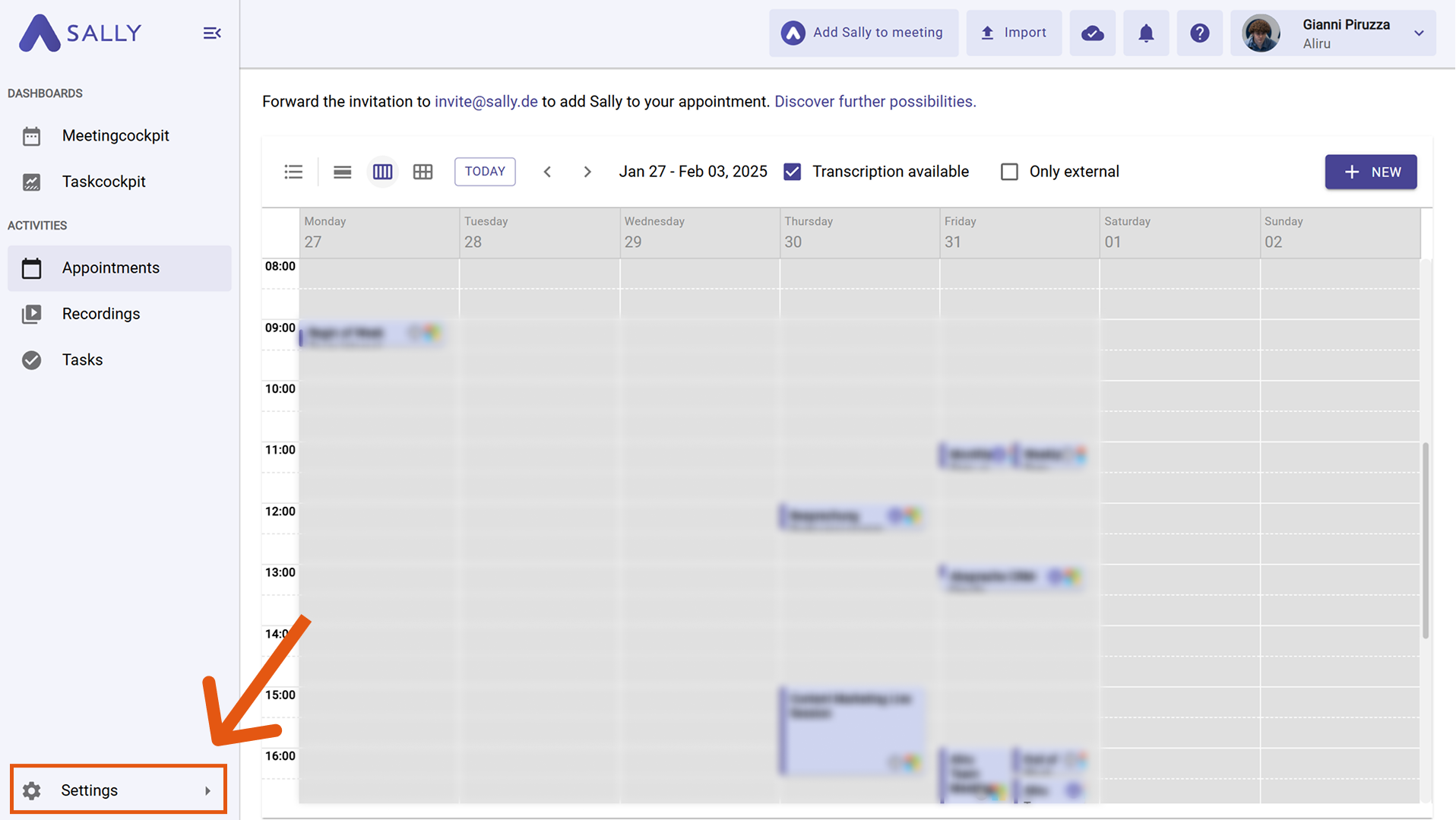

- Open Settings from the left navigation.

Figure 1: Go to Settings

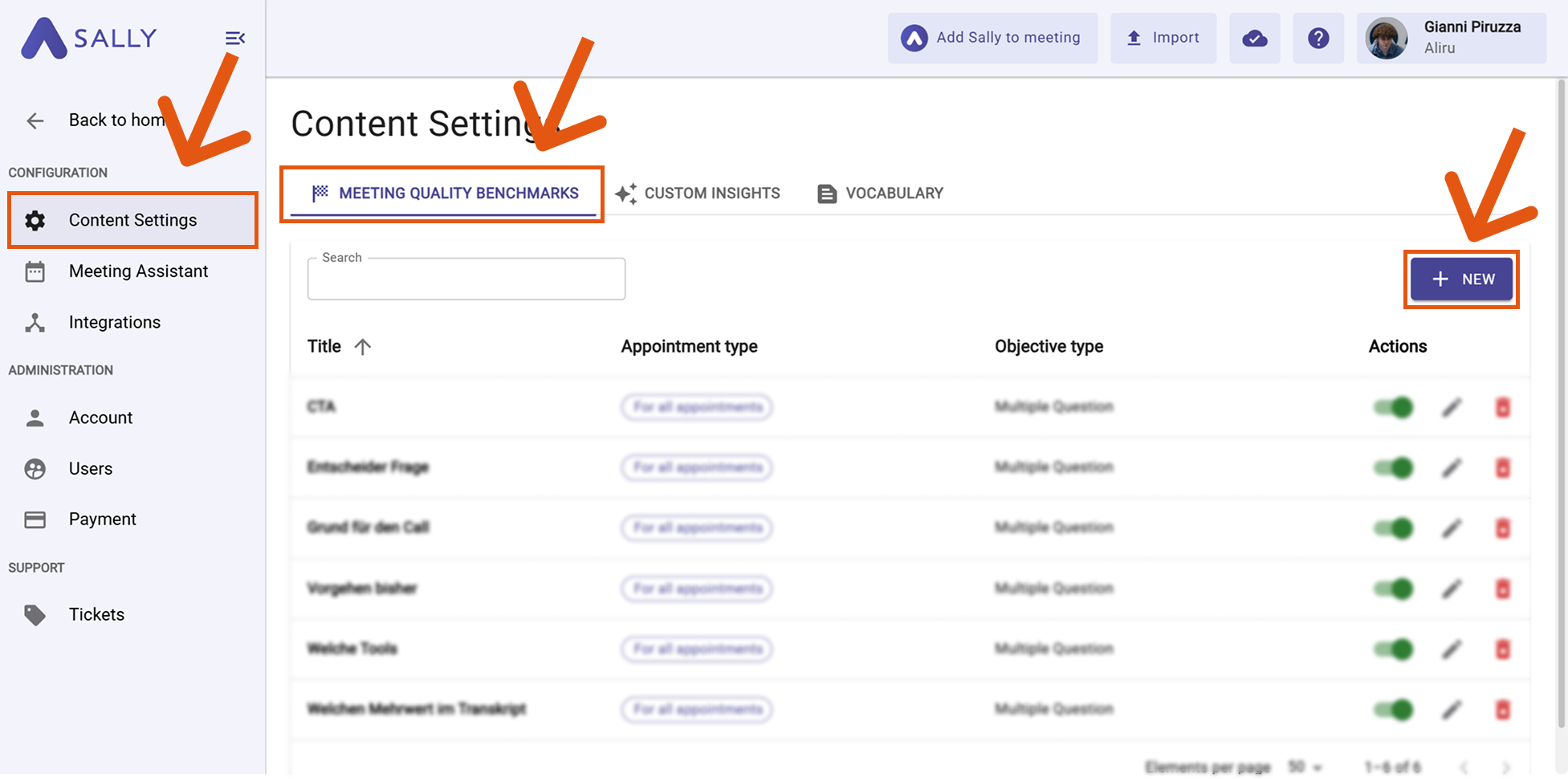

- Go to "Content Settings".

- Open the tab "Meeting Quality Benchmarks".

- Click "+ New" to create a benchmark (objective).

Figure 2: Create new Quality Benchmark

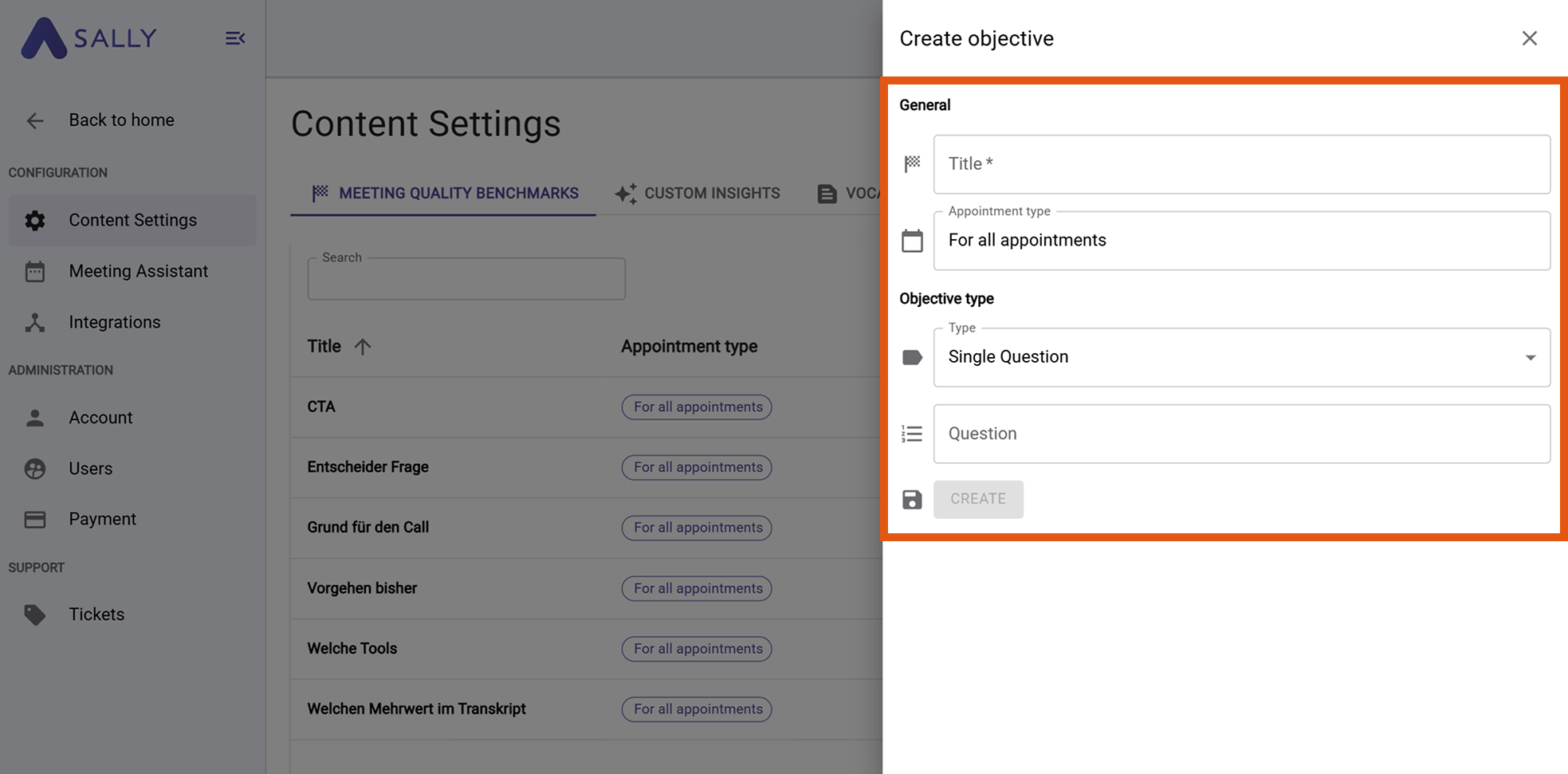

- A window opens where you can configure your quality benchmark:

Figure 3: Quality Benchmark Settings

Here you can configure the following settings:

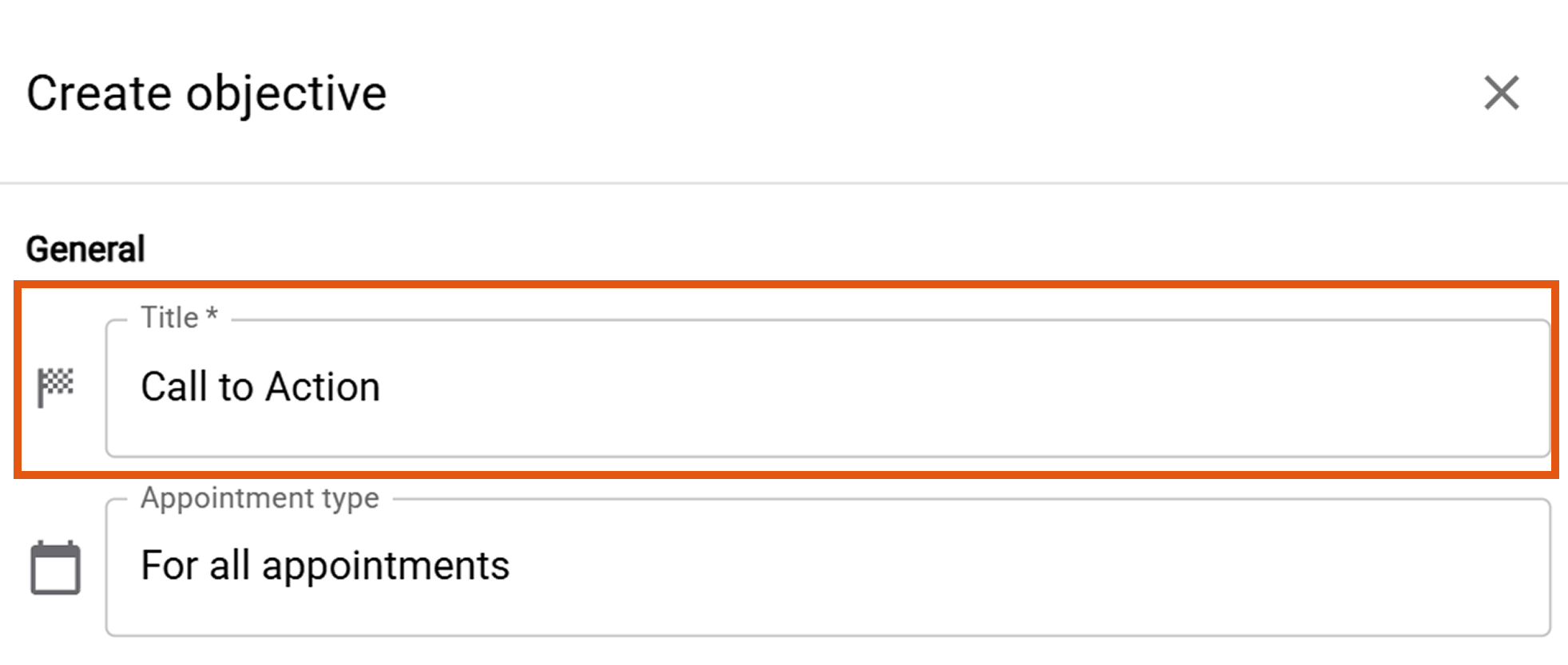

4.1 Title

Enter a clear name for the benchmark (e.g., “Call to Action” or “Pricing objection handled with ROI”).

Figure 4: Select Title

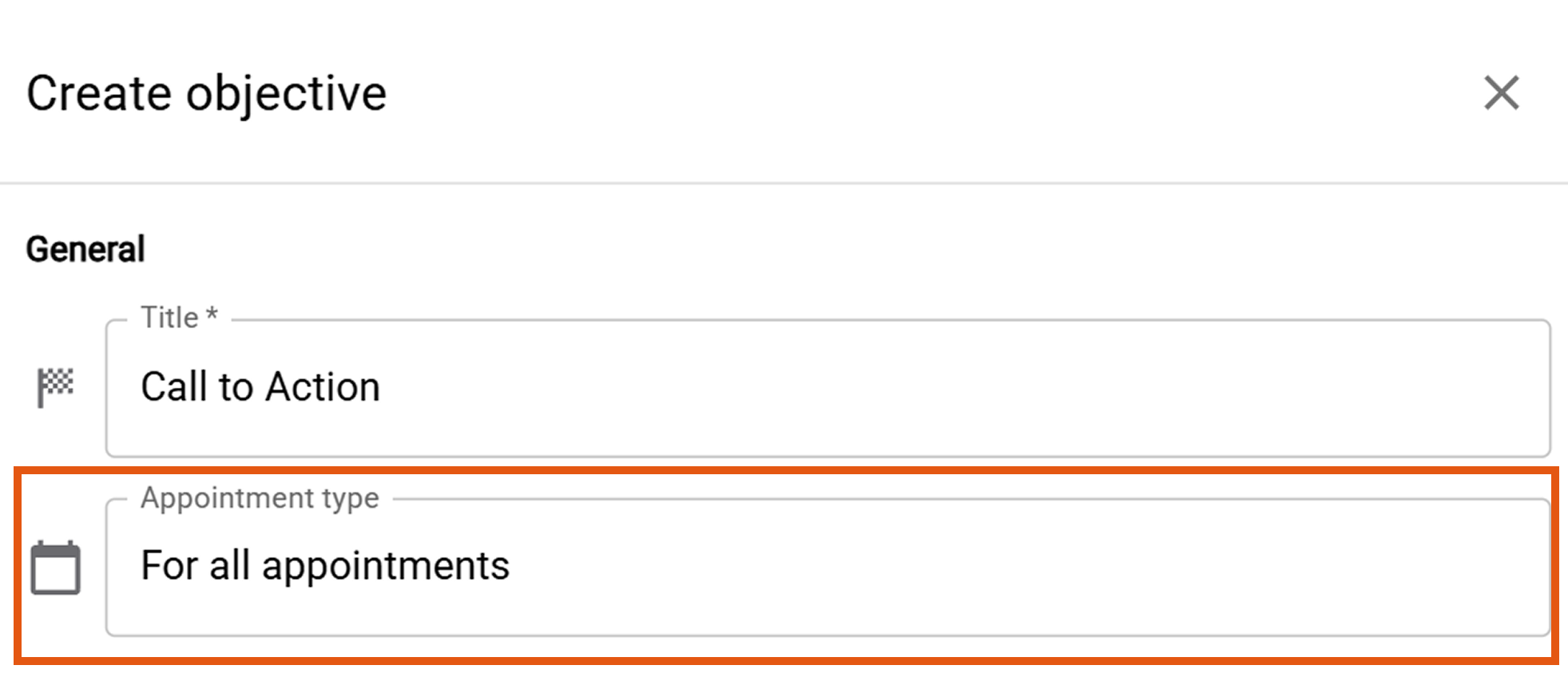

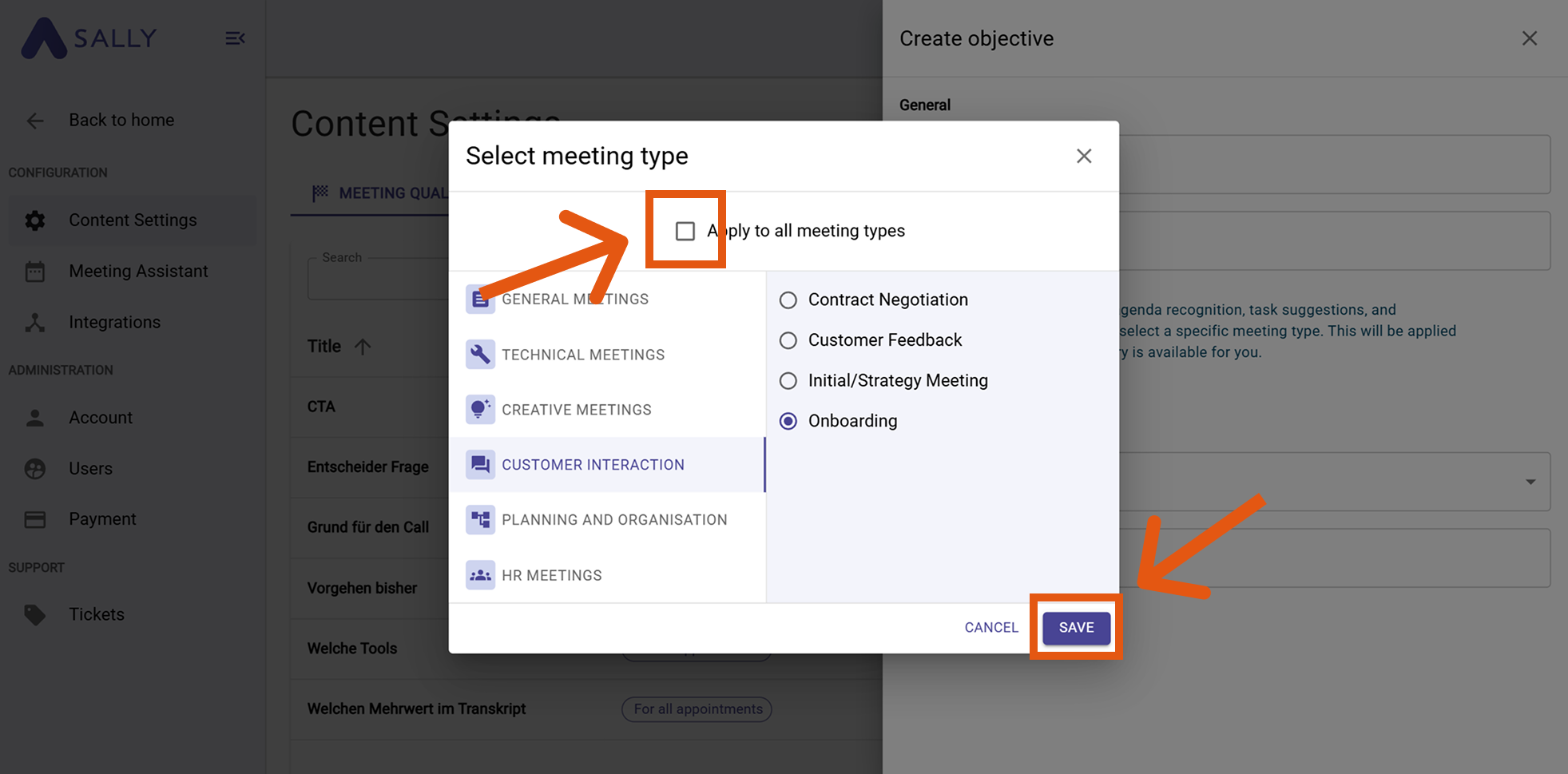

4.2 Appointment/Meeting type

Apply to all appointments or select a specific meeting type (e.g., HR Meeting, Onboarding or Qualification Call).

This ensures you only evaluate meetings where the benchmark is relevant.

Figure 5: Choose Meeting Type

To limit the benchmark to specific meeting types, make sure the “Apply to all meeting types” toggle is turned off, then select the meeting types and save your selection.

Figure 6: Select Meeting Type

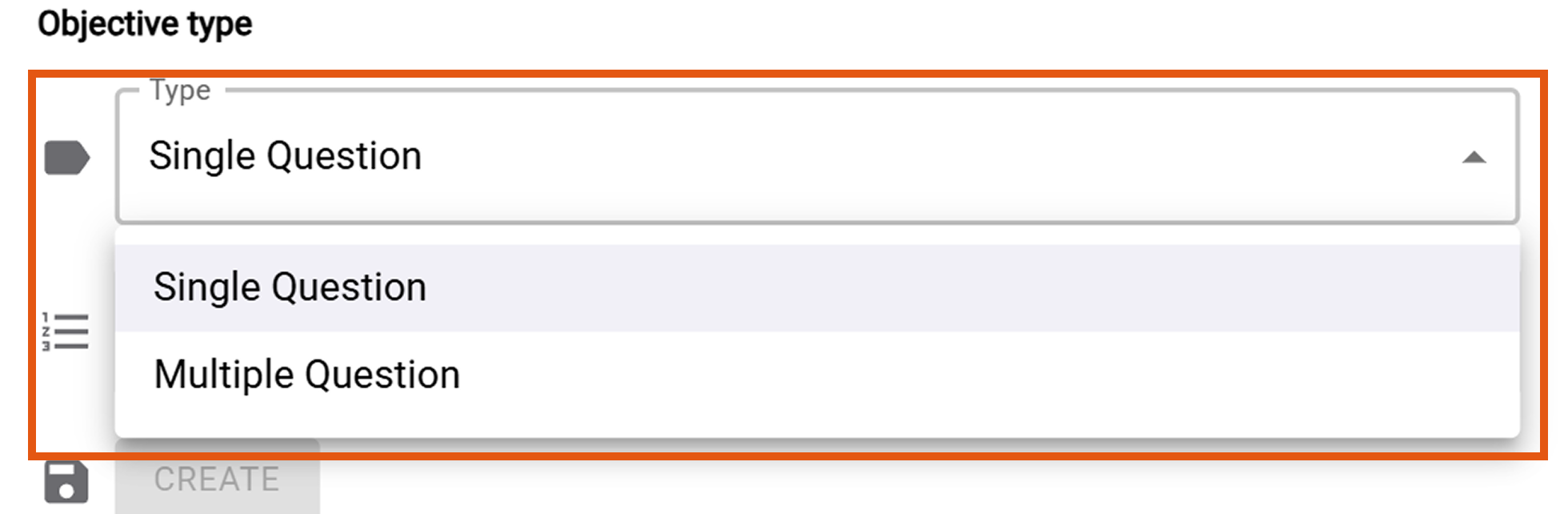

4.3 Objective type

Now select the "Objective type".

Figure 7: Choose Objective Type

4.3.1 Single Question

With this type, you define one specific question that should be asked in the meeting.

Sally checks whether this exact phrase appears in the transcript.

Example: “Did we cover all agenda points?”

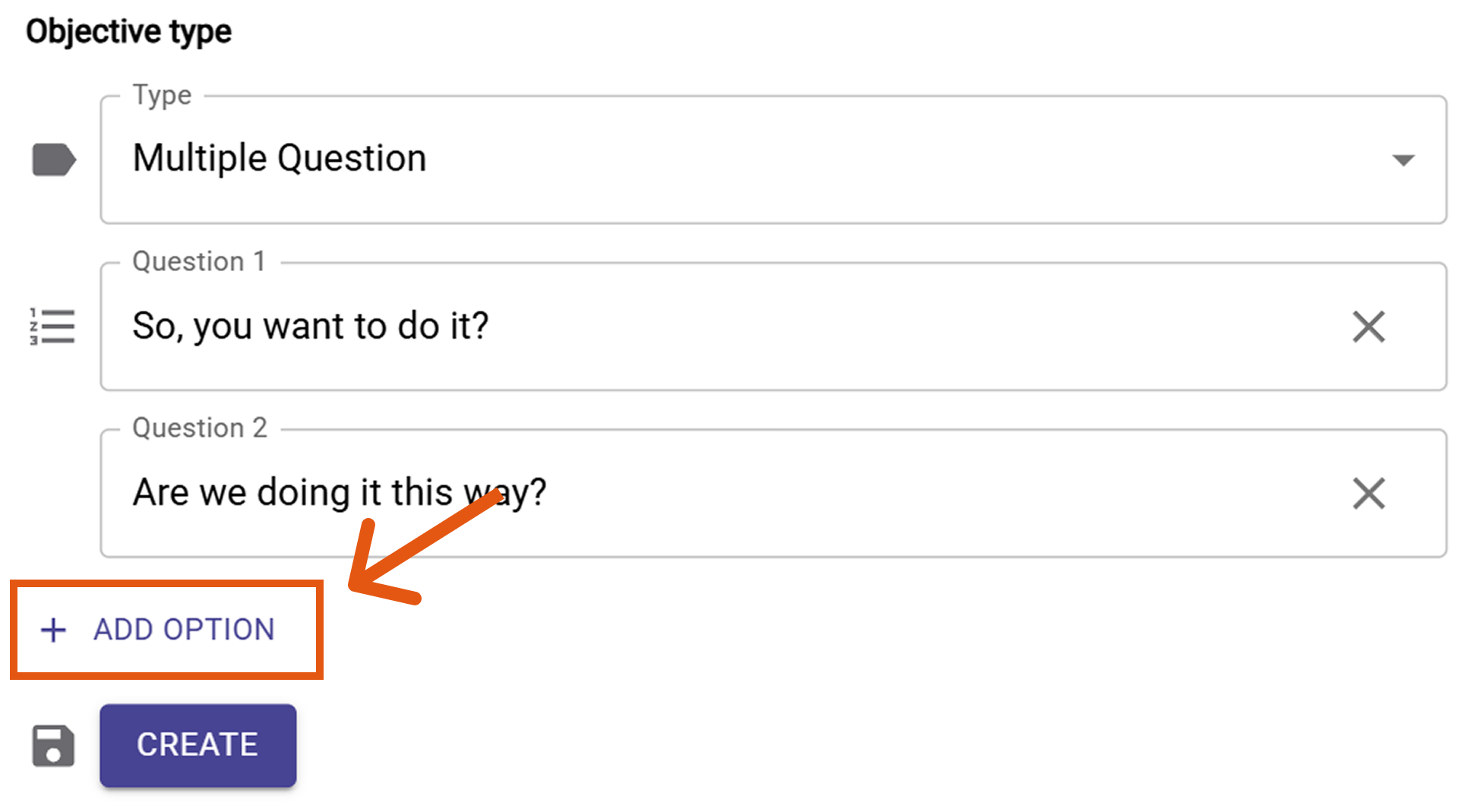

4.3.2 Multiple Question

Use this when the same intent can be expressed in different ways during a meeting.

For example, confirming agreement could be expressed as:

- “Shall we go ahead with this?”

- “Do we all agree to move forward?”

- “Are we aligned on doing this?”

- “So, are we doing this?”

- “How are we looking on that?”

Add each variation as its own “question” by clicking the "Add Option" button. The benchmark counts as met if any one of the variations appears.

Figure 8: Select multiple phrases

This increases detection accuracy, since people rarely use the exact same wording every time.

When you have finished configuring the benchmark, click "Create" to save it. The new benchmark then appears in your benchmarks list:

Figure 9: List of your Benchmarks
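The Single Question and Multiple Question options come down to one rule: a benchmark is found if any of its phrases is detected in a meeting it applies to. The Python sketch below illustrates that rule under clearly stated assumptions. It is not Sally's implementation (detection is not documented here and likely goes beyond literal text matching); the transcript format is hypothetical, and `Segment`, `Benchmark`, `fmt`, and `evaluate` are illustrative names only. It uses naive case-insensitive substring matching and treats an empty meeting-type set as "Apply to all meeting types".

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Segment:
    """Hypothetical transcript segment: start offset in seconds and spoken text."""
    start_s: int
    text: str


@dataclass
class Benchmark:
    """Hypothetical model: one phrase (Single Question) or several variations (Multiple Question)."""
    title: str
    phrases: list[str]
    # An empty set stands in for "Apply to all meeting types".
    meeting_types: set[str] = field(default_factory=set)


def fmt(seconds: int) -> str:
    """Format a start offset as mm:ss, like a transcript timestamp."""
    return f"{seconds // 60:02d}:{seconds % 60:02d}"


def evaluate(
    benchmark: Benchmark, meeting_type: str, transcript: list[Segment]
) -> Optional[tuple[str, list[str], list[str]]]:
    """Return None if the benchmark is out of scope, else (status, timestamps, snippets).

    Naive stand-in for detection: a segment counts as a hit if it contains ANY
    of the benchmark's phrases (case-insensitive substring match).
    """
    # Meeting type filter: skip meetings the benchmark does not apply to.
    if benchmark.meeting_types and meeting_type not in benchmark.meeting_types:
        return None
    needles = [p.lower() for p in benchmark.phrases]
    hits = [s for s in transcript if any(n in s.text.lower() for n in needles)]
    status = "Found" if hits else "Not Found"
    return status, [fmt(s.start_s) for s in hits], [s.text for s in hits]


# Usage: a Multiple Question benchmark scoped to one meeting type.
transcript = [
    Segment(65, "Thanks for joining, let's review the agenda."),
    Segment(1730, "So, are we doing this?"),
]
agreement = Benchmark(
    title="Agreement confirmed",
    phrases=["Shall we go ahead with this?", "So, are we doing this?"],
    meeting_types={"Qualification Call"},
)
print(evaluate(agreement, "Qualification Call", transcript))
# ('Found', ['28:50'], ['So, are we doing this?'])
print(evaluate(agreement, "HR Meeting", transcript))
# None
```

Because this sketch matches wording literally, it also shows why adding several natural phrasings raises the chance that a benchmark is detected.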

5. Writing effective benchmarks

Follow these best practices to make your benchmarks as clear and detectable as possible:

- Phrase naturally: Write the benchmark exactly as someone would say it in the meeting. Avoid unnatural wording that nobody would actually use in conversation.

- Be specific: “Did we confirm the next step and assign an owner?” is better than “Was the meeting effective?”

- One requirement per question: Avoid compound questions that bundle several separate checks into one sentence.

- Use the meeting type filter: Don’t apply sales checks to HR interviews.

- Limit the number: 3–7 items per meeting works well—enough to guide, not overwhelm.

- Test on past recordings: Create the benchmark, review its results on a few recordings, then refine the phrasing.

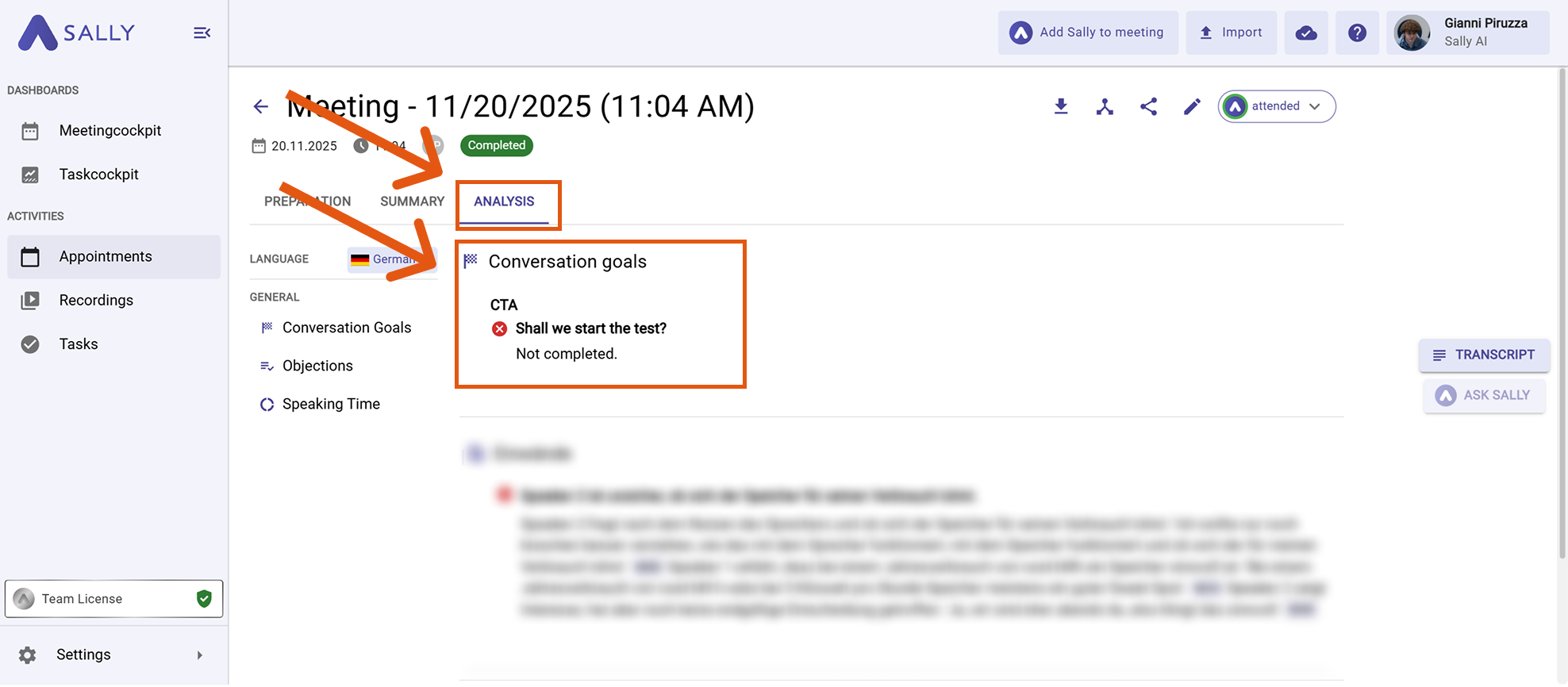

6. Where you see results in a summary

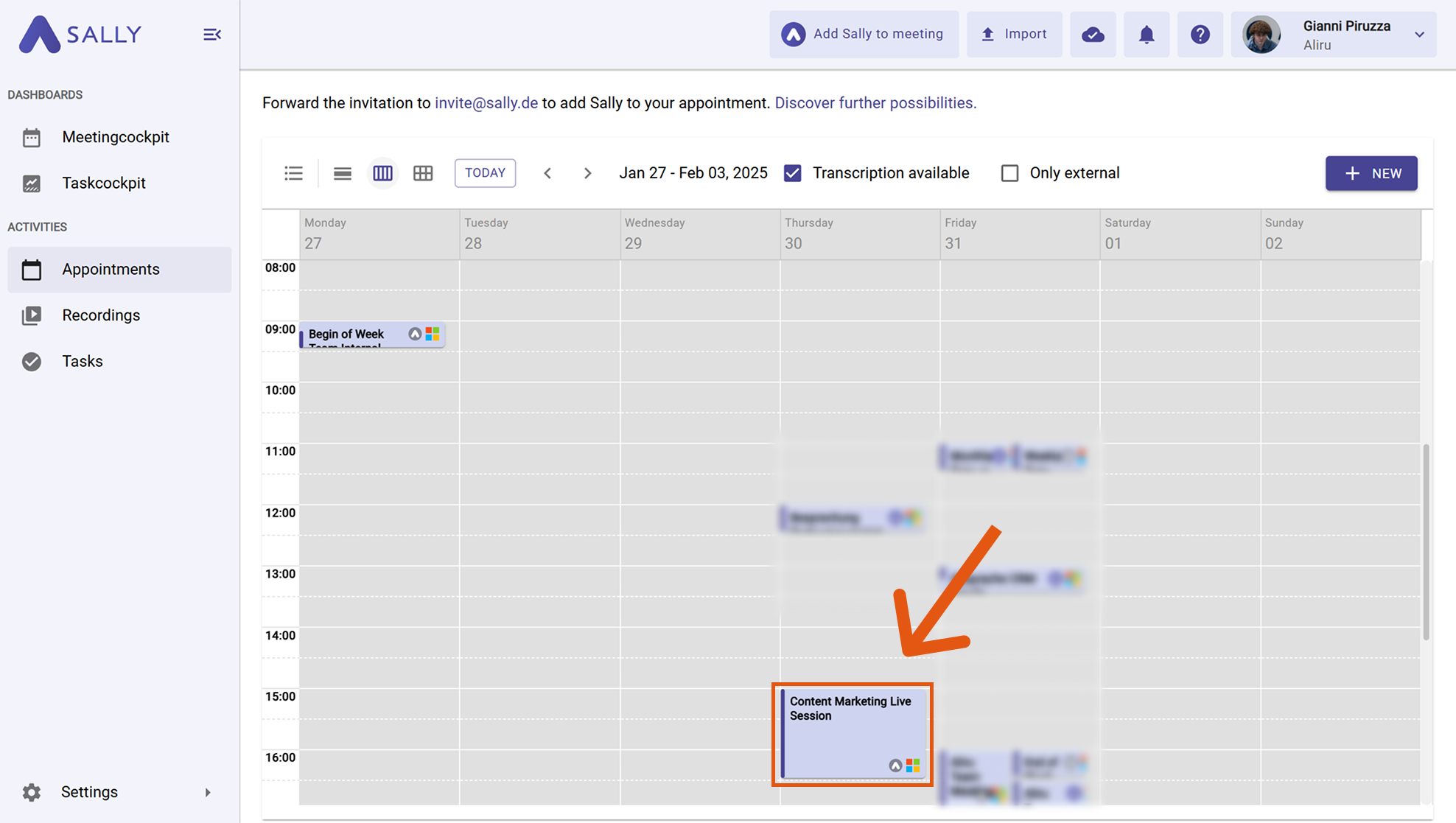

- After a meeting is processed, open the appointment.

Figure 10: Go to your meeting

- Go to the section called "Sales Analytics".

Figure 11: Open the Sales Analytics section

For each benchmark you’ll find:

- a status (e.g., Found / Not Found),

- one or more timestamps that link to the exact spots in the transcript,

- a short evidence snippet to review quality quickly.

7. Concrete example benchmarks

7.1 Sales objection handling

- Benchmark (Single Question): “If the customer raises a price objection, did the rep respond with ROI or total cost of ownership?”

- Why it helps: Ensures reps follow the agreed argumentation strategy when “too expensive” comes up.

7.2 Customer Success check‑in

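- Benchmarks (Multiple Question): create one benchmark per requirement and add phrasing variations to each. Listing all three requirements as options of a single benchmark would mark it as met as soon as any one of them is covered.

  - “Did we confirm the customer’s current goal or desired outcome?”

  - “Did we agree on a next step with an owner and due date?”

  - “Did we ask for a quick satisfaction rating or sentiment?”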

- Why it helps: Keeps every check‑in consistent and action‑oriented.

7.3 Hiring interview basics

- Benchmark (Single Question): “Did the interviewer explain the role expectations and next steps to the candidate?”

- Why it helps: Improves candidate experience and standardizes interview flow.

7.4 Project kickoff essentials

- Benchmarks (Multiple Question): create one benchmark per requirement, each with its own phrasing variations:

  - “Did we define scope and success criteria?”

  - “Did we identify risks or dependencies?”

  - “Did we align on responsibilities and timeline?”

- Why it helps: Ensures clarity from day one and reduces rework.

7.5 Privacy & compliance

- Benchmark (Single Question): “Was the meeting privacy notice presented at the beginning?”

- Why it helps: Documents compliance in regulated environments.

8. Troubleshooting & tips

- Benchmark doesn’t appear in a summary: Check that it’s enabled and that the meeting type matches the benchmark’s scope.

- Detection seems inconsistent: Simplify wording; avoid two requirements in one sentence; improve audio quality (mic close to speaker, reduce echo, avoid talking over each other).

- Language mix: Keep a single language per meeting where possible; mixed languages can reduce detection accuracy.

- Too many benchmarks: Start small and iterate—better to enforce a few critical behaviors well.

9. Next steps

- Create 2–3 high‑impact benchmarks per meeting type (e.g., sales discovery, onboarding, interview).

- Test on a handful of past recordings and refine phrasing.

- Roll out to the team and use results for coaching and QA reviews.